Dr. V.K. Maheshwari, Former Principal

K.L.D.A.V (P.G) College, Roorkee, India

Operant conditioning was developed by Burrhus Frederic Skinner (1904–1990), a psychologist at Harvard University, in 1938. The term was coined by Skinner, which is why one may occasionally hear it referred to as Skinnerian conditioning. Skinner believed that the best way to understand behaviour is to look at the causes of an action and its consequences.

Beginning in the 1930s, Skinner started his experimentation on the behaviour of animals. Skinner’s quest was to observe the relationship between observable stimuli and responses. Essentially, he wanted to know why these animals behaved the way they did.

Definition of Operant Conditioning:

Operant conditioning refers to a kind of learning process where a response is made more probable or more frequent by reinforcement. It helps in the learning of operant behaviour, the behaviour that is not necessarily associated with a known stimulus.

Operant conditioning (sometimes referred to as instrumental conditioning) is a method of learning that occurs through rewards and punishments for behaviour. Through these rewards and punishments, an association is made between a behaviour and a consequence for that behaviour.

Operant conditioning is defined as the use of consequences to modify the occurrence and form of behaviour. “To put it very simply, behaviour that is followed by pleasant consequences tends to be repeated and thus learned. Behaviour that is followed by unpleasant consequences tends not to be repeated and thus not learned” (Alberto & Troutman, 2006, p. 12). Operant conditioning is specifically limited to voluntary behaviour, that is, emitted responses, which distinguishes it from respondent or Pavlovian conditioning, which is limited to reflexive behaviour (or elicited responses).

Operant Conditioning is a type of learning in which a behaviour is strengthened (meaning, it will occur more frequently) when it’s followed by reinforcement, and weakened (will happen less frequently) when followed by punishment. Operant conditioning is based on a simple premise – that behaviour is influenced by the consequences that follow. When you are reinforced for doing something, you’re more likely to do it again. When you are punished for doing something, you are less likely to do it again.

Skinner’s Operant Conditioning

Skinner used the term operant to refer to any “active behaviour that operates upon the environment to generate consequences”. In other words, Skinner’s theory explained how we acquire the range of learned behaviours we exhibit each and every day.

Skinner considers an operant to be an act which constitutes an organism’s doing something. Operant conditioning can be defined as a type of learning in which voluntary (controllable; non-reflexive) behaviour is strengthened if it is reinforced and weakened if it is punished (or not reinforced).

Operant conditioning recognizes the differences between elicited responses and emitted responses. The former are responses associated with a particular stimulus, and the latter are responses that act on the environment to produce different kinds of consequences that affect the organism and alter future behaviour. As a behaviourist, Skinner believed that internal thoughts and motivations could not be used to explain behaviour. Instead, he suggested, we should look only at the external, observable causes of human behaviour.

Skinner’s research focuses on the manipulation of the consequences of an organism’s behaviour and its effect on subsequent behaviour. Learning can be understood by a basic S (Discriminative Stimulus)-R (Operant Response, the behaviour)-S (Contingent Stimulus, the reinforcing stimulus) relationship. The change in behaviour is operated by the contingencies of reinforcement. A reinforcing event is any behavioural consequence that strengthens behaviour.

Fundamental Experiment

Operant Conditioning, or Instrumental Conditioning, is the method of teaching associations between behaviours and the behaviour’s consequences, thereby strengthening or weakening the behaviours. Strengthened behaviours are those that have high probability of re-occurrence, while weakened behaviours are those that have low probability of re-occurrence. Operant conditioning involves voluntary responses (operant behaviour), whereas classical conditioning involves involuntary responses (respondent behaviour).

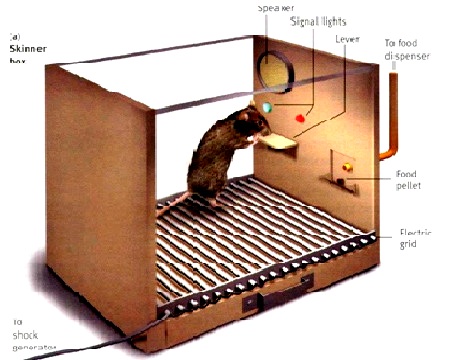

Prior to the work of Skinner, instrumental learning was typically studied using a maze or a puzzle box. Learning in these settings is better suited to examining discrete trials or episodes of behavior, instead of the continuous stream of behavior. The Skinner box is an experimental environment that is better suited to examine the more natural flow of behavior. (The Skinner box is also referred to as an operant conditioning chamber.)

It was B.F. Skinner who expanded Thorndike’s law of effect. Many of the principles of operant conditioning known and used today came from Skinner’s extensive research experiments. For example, he was able to successfully teach pigeons to guide missile direction. His pigeons constantly pecked at a dot on a screen to keep the missile on track while being fed with food pellets. Although Skinner’s offer to use the pigeons during World War II was rejected by US Navy officials, the experiment showed the success of operant conditioning. Another good example is the Skinner box, where a rat is taught to press a lever to get food.

A Skinner box is often a small chamber that is used to conduct operant conditioning research with animals. Within the chamber, there is usually a lever (for rats) or a key (for pigeons) that an individual animal can operate to obtain food or water as a reinforcer. The chamber is connected to electronic equipment that records the animal’s lever pressing or key pecking, thus allowing for the precise quantification of behaviour.

Skinner’s method of conditioning went like this: pellets were dropped into the tray at random to accustom the rat to it, then the lever was installed, and the rat received a food pellet whenever it happened to press the lever. The Skinner box is named after Skinner because he subsequently improved the box to increase the degree of control he had over the experimental setting. He installed devices to measure activity inside the box precisely and to avoid human error: first he soundproofed it, then he installed a mechanical device to record the rat’s responses, and lastly he automated the food dispenser.

Skinner’s research focused on the experimental study of behaviour. The underlying assumptions about the research in Skinner’s experiments include:

• The lawful relationships between behaviour and environment can be found only if behavioural properties and experimental conditions are carefully studied.

• Data from experimental study of behaviour are the only acceptable sources of information about the causes of behaviour.

The Laws of Conditioning

Skinner proposed two laws that govern the conditioning of an operant:

• The Law of Conditioning: If the occurrence of an operant is followed by presentation of a reinforcing stimulus, the strength is increased.

• The Law of Extinction: If the occurrence of an operant already strengthened through conditioning is not followed by the reinforcing stimulus, the strength is decreased.
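The two laws can be illustrated with a small numerical sketch. This is only a toy model under stated assumptions: operant "strength" is treated as a number between 0 and 1, and the `gain` and `decay` rates are hypothetical values chosen for illustration, not quantities taken from Skinner.

```python
def update_strength(strength, reinforced, gain=0.2, decay=0.1):
    """Return the new operant strength (0..1) after one occurrence of the operant.

    gain and decay are illustrative assumptions, not empirical values.
    """
    if reinforced:
        # Law of Conditioning: a reinforced occurrence increases strength.
        return strength + gain * (1.0 - strength)
    # Law of Extinction: an unreinforced occurrence decreases strength.
    return strength - decay * strength


def run_phase(strength, trials, reinforced):
    """Apply the same outcome for a number of trials and record the strength."""
    history = []
    for _ in range(trials):
        strength = update_strength(strength, reinforced)
        history.append(strength)
    return history


# Ten reinforced occurrences followed by ten unreinforced ones.
acquisition = run_phase(0.1, 10, reinforced=True)
extinction = run_phase(acquisition[-1], 10, reinforced=False)

print([round(s, 2) for s in acquisition])
print([round(s, 2) for s in extinction])
```

In this sketch the strength rises on every reinforced occurrence and falls on every unreinforced one, which is all the two laws assert; the particular shape of the curves depends entirely on the assumed rates.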

The Learning Process

The procedure suggested in operant conditioning is indirectly based on Thorndike’s Law of Effect and Pavlov’s classical conditioning.

The simple behaviour learning process is based on connectionism, which holds that when a response R is closely associated with a stimulus S and the conjunction of the two is reinforced by primary need reduction, there is a greater possibility of that S-R connection recurring on later occasions. That is, the strength of the connection is directly proportional to need reduction.

The complex behaviour learning process is based on classical conditioning, which holds that a stimulus S that is reinforced by primary need reduction itself acquires the power to reinforce any other contiguous or immediately antecedent stimulus, and the chain can go on indefinitely.

S1 → R1

S2-S1 → R1

S2 → R1

S3-S2 → R1

S3 → R1

and so on.

Components of Operant Conditioning

Some key concepts in operant conditioning:

Responses

Types of Responses:

1. Elicited by known stimuli:

Respondent behaviour, which includes all reflexes. Examples are jerking one’s hand when jabbed with a pin, constriction of the pupils in bright light, and salivation in the presence of food.

Stimulus preceding the response is responsible for causing the behaviour.

2. Emitted by unknown stimuli:

Operant behaviour, which includes arbitrary movements. Examples are movement of one’s hands, arms or legs, a child abandoning one toy in favour of another, eating a meal, writing a letter, standing up and walking about, and similar everyday activities.

The stimulus is unknown, and knowledge of the cause of the behaviour is not important.

Reinforcement

There often seems to be considerable confusion about the relationship between conditioning and reinforcement. “Conditioning” is so called because it results in the formation of conditioned responses. A conditioned response is a response which is associated with, or evoked by, a new (conditioned) stimulus. Conditioning implies a principle of adhesion: one stimulus or response is attached to another stimulus or response so that revival of the first evokes the second.

Reinforcement is a special kind or aspect of conditioning in which the tendency for a stimulus to evoke a response on subsequent occasions is increased by reduction of a need or of a drive stimulus. A “need”, as used here, is an objective, biological requirement of an organism which must be met if the organism is to survive and grow. A “drive stimulus” is an aroused state of an organism. It is closely related to the need which sets the organism into action, and may be defined as a strong, persistent stimulus which demands an adjusting response. When an organism is deprived of satisfaction of a need, drive stimuli occur.

Schedules of Reinforcement

There are two types of reinforcement schedules: continuous and partial (intermittent). Partial schedules have four subtypes:

a) Fixed Ratio (FR) – reinforcement is given after every Nth response, where N is the size of the ratio (i.e., a certain number of responses have to occur before getting reinforcement).

b) Variable Ratio (VR) – the variable ratio schedule is the same as the FR schedule except that the ratio varies rather than staying fixed. Reinforcement is given after every Nth response, but N is an average.

c) Fixed Interval (FI) – a designated amount of time must pass, and then a certain response must be made in order to get reinforcement.

d) Variable Interval (VI) – the same as FI, but the time interval varies around an average.
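The four partial schedules can be made concrete with a short simulation. The sketch below is illustrative only: the function names, the assumption of one response per second, and the uniform distributions used for the variable schedules are all hypothetical choices, not part of Skinner’s procedures.

```python
import random


def fixed_ratio(n):
    """FR: reinforce every n-th response."""
    count = 0

    def respond(time):
        nonlocal count
        count += 1
        if count == n:
            count = 0
            return True
        return False

    return respond


def variable_ratio(mean_n, rng):
    """VR: reinforce after a varying number of responses averaging mean_n."""
    count = 0
    target = rng.randint(1, 2 * mean_n - 1)  # assumed uniform spread around the mean

    def respond(time):
        nonlocal count, target
        count += 1
        if count >= target:
            count = 0
            target = rng.randint(1, 2 * mean_n - 1)
            return True
        return False

    return respond


def fixed_interval(seconds):
    """FI: reinforce the first response made once `seconds` have elapsed."""
    available_at = seconds

    def respond(time):
        nonlocal available_at
        if time >= available_at:
            available_at = time + seconds
            return True
        return False

    return respond


def variable_interval(mean_seconds, rng):
    """VI: like FI, but the waiting time varies around mean_seconds."""
    available_at = rng.uniform(0, 2 * mean_seconds)

    def respond(time):
        nonlocal available_at
        if time >= available_at:
            available_at = time + rng.uniform(0, 2 * mean_seconds)
            return True
        return False

    return respond


def count_reinforcers(schedule, responses=60):
    """Assume one response per second, at times 1, 2, ..., responses."""
    return sum(schedule(t) for t in range(1, responses + 1))


rng = random.Random(0)  # seeded so the variable schedules are repeatable
results = {
    "FR-5": count_reinforcers(fixed_ratio(5)),
    "VR-5": count_reinforcers(variable_ratio(5, rng)),
    "FI-10": count_reinforcers(fixed_interval(10)),
    "VI-10": count_reinforcers(variable_interval(10, rng)),
}
print(results)
```

With a steady response rate, the ratio schedules pay out according to how many responses are made, while the interval schedules pay out according to how much time has passed, however many responses occur in between.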

The concept of reinforcement is identical to the presentation of a reward.

Reinforcement is any event that strengthens or increases the behaviour it follows. Reinforcement is the process of strengthening behaviour through the use of rewarding consequences.

Skinner identified two types of reinforcing events – those in which a reward is given; and those in which something bad is removed. In either case, the point of reinforcement is to increase the frequency or probability of a response occurring again.

Kinds of reinforcement:

Positive Reinforcement

In Positive Reinforcement a particular behaviour is strengthened by the consequence of experiencing a positive condition: the organism is given a pleasant stimulus when the operant response is made. For example, a rat presses the lever (operant response) and receives a treat (positive reinforcement).

Negative reinforcement

Negative reinforcement – take away an unpleasant stimulus when the operant response is made. For example, stop shocking a rat when it presses the lever.

Generally people use the term “negative reinforcement” incorrectly. It is NOT a method of increasing the chances an organism will behave in a bad way. It is a method of rewarding the behaviour you want to increase. It is a good thing – not a bad thing!

In Negative Reinforcement a particular behaviour is strengthened by the consequence of stopping or avoiding a negative condition. Positive Reinforcement uses rewarding stimuli, while Negative Reinforcement removes aversive stimuli, to strengthen behaviours.

Reinforcer:

A reinforcer is the stimulus the presentation or removal of which increases the probability of a response being repeated.

Kinds of reinforcers:

There are two kinds of reinforcers:

Positive Reinforcers:

A positive reinforcer is any stimulus the introduction or presentation of which increases the likelihood of a particular behaviour. Some such stimuli are primary reinforcers: they naturally strengthen any response that precedes them (e.g., food, water, sex) without the need for any learning on the part of the organism.

Positive reinforcers are favourable events or outcomes that are presented after the behaviour. The organism is given a pleasant stimulus when the operant response is made, so a response or behaviour is strengthened by the addition of something, such as praise or a direct reward.

Negative Reinforcers

A negative reinforcer is any stimulus the removal or withdrawal of which increases the likelihood of a particular behaviour. An example from an educational context is a teacher telling students that whoever does the drill work properly in class will be exempted from homework; scolding students is a further example. Negative reinforcers involve the removal of unfavourable events or outcomes after the display of a behaviour. In these situations, a response is strengthened by the removal of something considered unpleasant.

Reinforcers can also be secondary (conditioned): a previously neutral stimulus acquires the ability to strengthen responses because it has been paired with a primary, innately rewarding reinforcer such as food. For example, an organism may become conditioned to the sound of the food dispenser, which occurs after the operant response is made. Thus, the sound of the food dispenser becomes reinforcing. Notice the similarity to classical conditioning, with the exception that the behaviour is voluntary and occurs before the presentation of a reinforcer.

In both of these cases of reinforcement, the behaviour increases.

Punishment

In Punishment a particular behaviour is weakened by the consequence of experiencing a negative condition. Punishment, on the other hand, is the presentation of an adverse event or outcome that causes a decrease in the behaviour it follows.

Punishment is the process in which a behaviour is weakened and thus made less likely to happen again. It involves consequences which do not reinforce or strengthen behaviour and which aim to reduce behaviours by imposing unwelcome consequences.

Skinner did not believe that punishment was as powerful a form of control as reinforcement, even though it is very commonly used. It is not truly the opposite of reinforcement, as he originally thought, and its effects are normally short-lived.

Types of punishment:

Positive punishment, sometimes referred to as punishment by application, involves the presentation of an unfavourable event or outcome in order to weaken the response it follows.

It reduces a behaviour by presenting an unpleasant stimulus when the behaviour occurs. If the rat previously pressed the lever and received food and now receives a shock, the rat will learn not to press the lever.

In other words, an aversive stimulus is presented to decrease the probability of an operant response occurring again. For example, a child reaches for a cookie before dinner, and you slap his hand.

Negative punishment, also known as punishment by removal, occurs when a favourable event or outcome is removed after a behaviour occurs. It reduces a behaviour by removing a pleasant stimulus when the behaviour occurs. If the rat was previously given food for each lever press, but now receives food consistently when not pressing the lever (and not when it presses the lever), the rat will learn to stop pressing the lever.

In both of these cases of punishment, the behaviour decreases.
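The four cases of reinforcement and punishment described above reduce to two questions: is a stimulus added or removed, and does the behaviour increase or decrease? A minimal sketch of that two-by-two rule follows; the function name and the string labels are hypothetical conveniences for illustration.

```python
def classify_consequence(stimulus_change, behaviour_change):
    """Name the operant procedure.

    stimulus_change: "added" or "removed" (what happens after the response)
    behaviour_change: "increases" or "decreases" (effect on the response)
    """
    # "Positive" and "negative" describe adding or removing a stimulus,
    # not whether the outcome is good or bad.
    sign = {"added": "positive", "removed": "negative"}[stimulus_change]
    # Reinforcement strengthens behaviour; punishment weakens it.
    kind = {"increases": "reinforcement", "decreases": "punishment"}[behaviour_change]
    return f"{sign} {kind}"


# The examples used in the text:
print(classify_consequence("added", "increases"))    # treat after a lever press
print(classify_consequence("removed", "increases"))  # shock stops after a lever press
print(classify_consequence("added", "decreases"))    # hand slapped when reaching for a cookie
print(classify_consequence("removed", "decreases"))  # food withheld after a lever press
```

Written this way, it is easier to see why negative reinforcement is not punishment: the first question fixes only the word "positive" or "negative", and the second fixes whether the behaviour is being strengthened or weakened.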

Reinforcements and Punishments

Whereas reinforcement increases the probability of a response occurring again, the premise of punishment is to decrease the frequency or probability of a response occurring again. Because learning takes time in operant conditioning, reinforcement develops approximations of the desired behaviour. Punishment, on the other hand, is the process of weakening or extinguishing behaviour through the use of aversive (or undesirable) consequences.

Reinforcements and punishments are categorized as positive/negative, primary/secondary and partial/continuous. Positive Reinforcement uses rewarding stimuli, while Negative Reinforcement removes aversive stimuli, to strengthen behaviours. On the other hand, Positive Punishment uses aversive stimuli, while Negative Punishment removes rewarding stimuli, to weaken behaviours.

Primary Reinforcements use rewarding stimuli that are innately satisfying, such as food, water and sex, while Secondary Reinforcements use rewarding stimuli that are learned (or conditioned), such as eye contact, a pat on the back or a smile. Token reinforcers, such as money, may be exchanged for other reinforcing stimuli. On the other hand, Primary Punishments use aversive stimuli that are innately punishing, such as painful objects and poisonous substances, while Secondary Punishments use aversive stimuli that are learned (or conditioned), such as loss of trust or an angry look from other people.

Continuous Reinforcements use rewarding consequences all the time, while Partial Reinforcements use rewarding consequences only a portion of the time, in order to successfully establish association. In the same way, Continuous Punishments use aversive consequences all the time, while Partial Punishments use aversive consequences only a portion of the time, in order to successfully establish association.

Learning principles

Shaping

Shaping refers to “the reinforcement of successive approximations to a goal behaviour”. This process requires the learner to perform successive approximations of the target behaviour, with the criterion for reinforcement changed so that it becomes more and more like the final performance. In other words, behaviour is reinforced each time it more closely approximates the target behaviour.

Shaping is an operant conditioning method for creating an entirely new behaviour by using rewards to guide an organism toward a desired behaviour through successive approximations. The organism is rewarded for each small advance in the right direction. Once one appropriate behaviour is made and rewarded, the organism is not reinforced again until it makes a further advance, then another and another, until the organism is rewarded only when the entire behaviour is performed. Shaping is thus a technique of reinforcement used to teach new behaviours: at the beginning, people or animals are reinforced for easy tasks, and then they increasingly need to perform more difficult tasks in order to receive reinforcement.

For example, to get a rat to learn how to press a lever, the experimenter will use small rewards after each behaviour that brings the rat toward pressing the lever. So, the rat is placed in the box. When it takes a step toward the lever, the experimenter will reinforce the behaviour by presenting food or water in the dish (located next to or under the lever). Then, when the rat makes any additional movement toward the lever, like standing in front of the lever, it is given reinforcement (note that the rat will no longer get a reward for just taking a single step in the direction of the lever). This continues until the rat reliably goes to the lever and presses it to receive a reward.
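The logic of shaping, in which only responses that meet the current criterion are reinforced and the criterion is raised once the learner meets it reliably, can be sketched as a toy simulation. Everything numerical here is an assumption made for illustration: behaviour is reduced to a single "closeness to the target" score between 0 and 1, responses vary randomly around the learner’s typical score, and the `noise` and `learning_rate` values are hypothetical.

```python
import random


def shape(levels, noise=0.2, learning_rate=0.5, max_trials=10_000, seed=0):
    """Reinforce successive approximations until the final criterion is met."""
    rng = random.Random(seed)
    typical = 0.0        # the learner's usual closeness to the target behaviour
    level = 0            # index of the criterion currently in force
    reinforced_at = []   # criterion in force each time reinforcement was given
    for trial in range(1, max_trials + 1):
        # Behaviour varies from trial to trial around the typical response.
        response = typical + rng.uniform(-noise, noise)
        if response >= levels[level]:
            # Reinforced responses become more typical of the learner.
            typical += learning_rate * (response - typical)
            reinforced_at.append(levels[level])
        if typical >= levels[level]:
            if level == len(levels) - 1:
                return trial, typical, reinforced_at
            # The old approximation no longer earns reinforcement.
            level += 1
    return max_trials, typical, reinforced_at


# Ten successive criteria, from a small step toward the target up to the target itself.
criteria = [i / 10 for i in range(1, 11)]
trials_used, final_typical, reinforced_at = shape(criteria)
print(trials_used, round(final_typical, 2), len(reinforced_at))
```

The design choice worth noting is that the criterion moves in small steps. In this model, a criterion set far beyond the learner’s current range of behaviour would never be met, so no response would ever be reinforced and nothing would be learned.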

Fading

Behaviours are acquired and exhibited because they are reinforced; non-reinforced behaviours tend not to occur. Individuals are clearly able to distinguish between settings in which certain behaviours will or will not be reinforced. The concept of fading refers to “the fading out of discriminative stimulus used to initially establish a desired behaviour” (Driscoll, 2000). The desired behaviour continues to be reinforced as the discriminative cues are gradually withdrawn.

Extinction

In Extinction a particular behaviour is weakened by the consequence of not experiencing a positive condition or stopping a negative condition. Extinction is the elimination of a behaviour by stopping reinforcement of that behaviour. For example, a rat that received food when pressing a lever and no longer receives it will gradually press the lever less often until it eventually stops altogether.

Generalization

In generalization, a behaviour may be performed in more than one situation. For example, the rat who receives food by pressing one lever may press a second lever in the cage in hopes that it will receive food.

Discrimination

Discrimination is learning that a behaviour will be rewarded in one situation but not in another. For example, the rat does not receive food from the second lever and learns that it will receive food only by pressing the first lever.

Chaining

Most complex acts are in fact a sequence of movements in which the Nth segment provides feedback stimuli (external and internal) which become discriminative for the next segment of the response. Thus the act may be thought of as a chain of small S-R units.

Skinner also provided an explanation of the mechanism underlying the nature of complex learning. He proposed that the acquisition of complex behaviours is the result of a process referred to as chaining. Chaining establishes “complex behaviours made up of discrete, simpler behaviours already known to the learner”.
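A chain of S-R units can be represented as a lookup in which each stimulus cues a response, and each response produces the stimulus that cues the next unit. The sketch below is a hypothetical illustration: the rat’s two-link chain and all of its labels are invented for the example.

```python
def run_chain(links, first_stimulus):
    """Follow a chain of S-R units from the first discriminative stimulus.

    links maps a stimulus to (response, stimulus produced by that response).
    Returns the responses performed, in order, and the final outcome.
    """
    stimulus = first_stimulus
    responses = []
    seen = set()  # guards against a chain that loops back on itself
    while stimulus in links and stimulus not in seen:
        seen.add(stimulus)
        response, stimulus = links[stimulus]
        responses.append(response)
    return responses, stimulus


# Hypothetical chain: each response produces the cue for the next response,
# and the last response produces the reinforcer.
rat_chain = {
    "light comes on": ("pull cord", "lever appears"),
    "lever appears": ("press lever", "food is delivered"),
}

responses, outcome = run_chain(rat_chain, "light comes on")
print(responses, "->", outcome)
```

Each intermediate stimulus does double duty in this representation: it is the consequence of one response and the discriminative cue for the next, which is what holds the chain together.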

Skinner’s position on typical Problems of Learning.

- Capacity: Skinner argues against the usefulness of a trait description in studying individual differences; a trait name does not refer to any unit of behaviour suitable for study through the functional analysis that he recommends. Thus Skinner would reject most ‘personality tests’, saying that they provide useless characterisations of the person. Intelligence tests might be useful for educational decisions, since they sample directly the problem-solving skills they purport to measure, but the tests do not tell us how to remedy specific educational disabilities.

- Practice: Something like a simple law of exercise is accepted for Type S conditioning. The conditioning that occurs under Type R depends upon repeated reinforcement. The possibility is favoured that maximum reinforcement may occur in a single trial for the single operant, but the single operant is difficult to achieve experimentally. Usually, the accumulation of strength with repeated reinforcement depends upon a population of discriminated stimuli and a chain of related operants.

- Motivation: Reinforcement is necessary to increase operant strength. Punishment has a diverse range of effects, although typically it suppresses the response. Internal drives are viewed as relatively useless explanatory constructs, similar to personality traits. Skinner recognizes the effects of explicit deprivation variables on the strength of operants reinforced by the restricted commodity, but he claims that nothing is added to a functional analysis by talking about a ‘drive’ intervening between deprivation operations and changes in the strength of operant responses.

- Understanding: The word insight does not occur in Skinner’s writings. The emergence of the solution is to be explained on the basis of (1) similarity of the present problem to one solved earlier or (2) the simplicity of the problem. The technique of problem-solving is essentially that of manipulating variables which lead to emission of the response. It is possible to teach people ‘to think’ or ‘to be creative’ by these methods.

- Transfer: Skinner used the word induction for what is commonly called generalization. Such induction is the basis for transfer. He recognizes both primary and ‘secondary’ or ‘mediated’ generalization. The reinforcement of a response increases the probability of that response or similar ones to all stimulus complexes containing the same elements. Included is feedback stimulation from verbal labels; thus, an overt response will occur to a novel object if some property of it controls a verbal label which in turn controls the overt response.

Educational Applications of Operant Conditioning

As a strong supporter of programmed instruction and teaching machines, Skinner thought that our education system was ineffective. He suggested that one teacher in a classroom could not teach many students adequately when each child learns at a different rate. He proposed using teaching machines (what we now call computers) that would allow each student to move at their own pace. The teaching machine would provide self-paced learning with immediate feedback, immediate reinforcement, identification of problem areas, etc. that a teacher could not possibly provide.

Operant conditioning is a vehicle for teachers to achieve behavior modification in order to improve classroom management and facilitate learning. There are three techniques employed in particular to facilitate learning: prompting, chaining, and shaping. Prompting involves giving students cues (called discriminative stimuli in the lexicon of operant conditioning) to help them perform a particular behavior. When students are learning to read, a teacher may help them by sounding out a word (just as when actors forget their lines, someone prompts them by saying their next line). Prompting helps to make the unfamiliar become more familiar, but, if used too often, students can become dependent on it, so teachers should withdraw prompts as soon as adequate student performance is obtained (a process called fading). Also, teachers should be careful not to begin prompting students until students have tried performing a task without extra help.

Learning complex behaviours can also be facilitated through an operant conditioning technique called chaining, a technique for connecting simple responses in sequence to form a more complex response that would be difficult to learn all at one time. Each cue or discriminative stimulus leads to a response that then cues the subsequent behaviour, enabling behaviours to be chained together.

The most general technique is called shaping, a process of reinforcing each form of the behaviour that more closely resembles the final version. It is used when students cannot perform the final version and are not helped by prompting. Shaping involves gradually changing the response criterion for reinforcement in the direction of the target behaviour.